In Bacillus species, a signal peptide (SP) that efficiently guides protein secretion is crucial for protein production, yet reliable computational tools for predicting SP efficiency are still lacking. SecEff-Pred is a novel web server built on an ESM-2-based predictor. Enhanced by an innovative data simulation strategy and a multi-task learning framework, SecEff-Pred predicts the secretion efficiency of signal peptides in B. subtilis. The server achieved prediction accuracies of 85.59% for α-amylase, 81.58% for alkaline xylanase, and 74.68% for cutinase. SecEff-Pred was further validated using phospholipase D (PLD) as a reporter protein, reaching an overall accuracy of 72% for signal peptide efficiency, with 80% for "efficient" SPs (corresponding to a maximum PLD activity of 929 U/mL) and 62.50% for "inefficient" SPs. These results indicate that SecEff-Pred is a powerful tool for guiding protein secretion in B. subtilis.

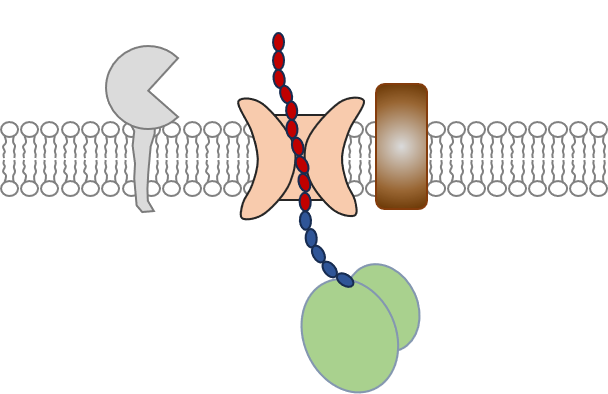

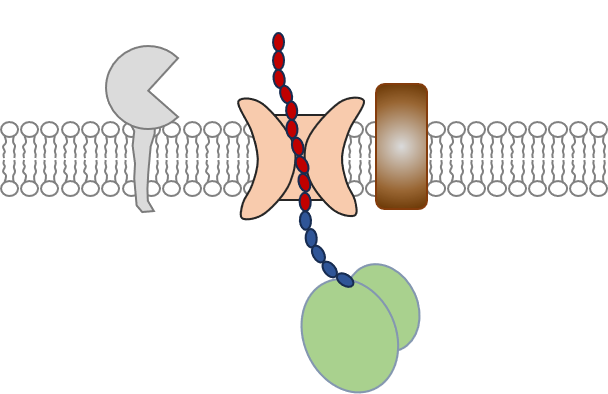

The prediction model is built on the ESM-2 framework. For an input amino acid sequence, an embedding layer first maps each residue to a high-dimensional embedding vector, with positional encoding incorporated to preserve the sequential order. These embeddings are then processed by a stack of 33 Transformer encoder layers. Within each layer, the sequence features are iteratively refined by multi-head self-attention and feed-forward neural networks. After the final encoder layer, average pooling is applied across the sequence length dimension to aggregate the information into a fixed-length 1,280-dimensional feature vector that represents the entire sequence. This representation is then passed to a multilayer perceptron (MLP), which performs the final regression task of predicting secretion efficiency.
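The pooling and regression steps described above can be illustrated with a minimal pure-Python sketch. It does not reproduce the ESM-2 encoder or the SecEff-Pred implementation: the per-residue embeddings are assumed to come from the final encoder layer, and the names `mean_pool` and `MLPRegressor`, the single hidden layer, its width of 64, the ReLU activation, and the random weights are all hypothetical choices made only for illustration.

```python
import math
import random


def mean_pool(token_embeddings, mask=None):
    """Average per-residue vectors over the sequence length dimension.

    token_embeddings: list of equal-length float lists, one per residue.
    mask: optional list of 0/1 flags; positions flagged 0 (e.g. padding)
          are left out of the average.
    """
    if mask is None:
        mask = [1] * len(token_embeddings)
    kept = [vec for vec, keep in zip(token_embeddings, mask) if keep]
    if not kept:
        raise ValueError("cannot pool an empty sequence")
    dim = len(kept[0])
    return [sum(vec[i] for vec in kept) / len(kept) for i in range(dim)]


class MLPRegressor:
    """Hypothetical regression head: Linear -> ReLU -> Linear -> scalar."""

    def __init__(self, in_dim=1280, hidden_dim=64, seed=0):
        rng = random.Random(seed)
        s1 = 1.0 / math.sqrt(in_dim)
        s2 = 1.0 / math.sqrt(hidden_dim)
        # Untrained, randomly initialised weights (illustration only).
        self.w1 = [[rng.uniform(-s1, s1) for _ in range(in_dim)]
                   for _ in range(hidden_dim)]
        self.b1 = [0.0] * hidden_dim
        self.w2 = [rng.uniform(-s2, s2) for _ in range(hidden_dim)]
        self.b2 = 0.0

    def __call__(self, features):
        # Hidden layer with ReLU activation.
        hidden = [
            max(0.0, sum(w * x for w, x in zip(row, features)) + b)
            for row, b in zip(self.w1, self.b1)
        ]
        # Single scalar output: the predicted secretion efficiency score.
        return sum(w * h for w, h in zip(self.w2, hidden)) + self.b2


if __name__ == "__main__":
    rng = random.Random(42)
    seq_len, dim = 30, 1280
    # Stand-in for the encoder output of a 30-residue signal peptide.
    fake_embeddings = [[rng.gauss(0.0, 1.0) for _ in range(dim)]
                       for _ in range(seq_len)]
    pooled = mean_pool(fake_embeddings)
    head = MLPRegressor(in_dim=dim)
    print(len(pooled), head(pooled))
```

Because the average is taken over the length dimension, signal peptides of different lengths all map to a vector of the same size, which is what allows a single fixed-width MLP to score them.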

Article is ongoing.